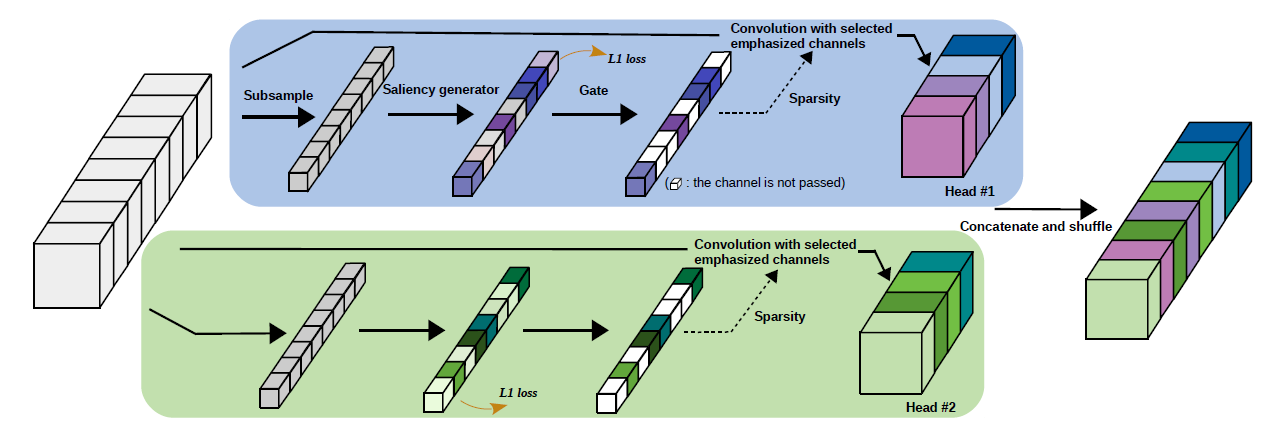

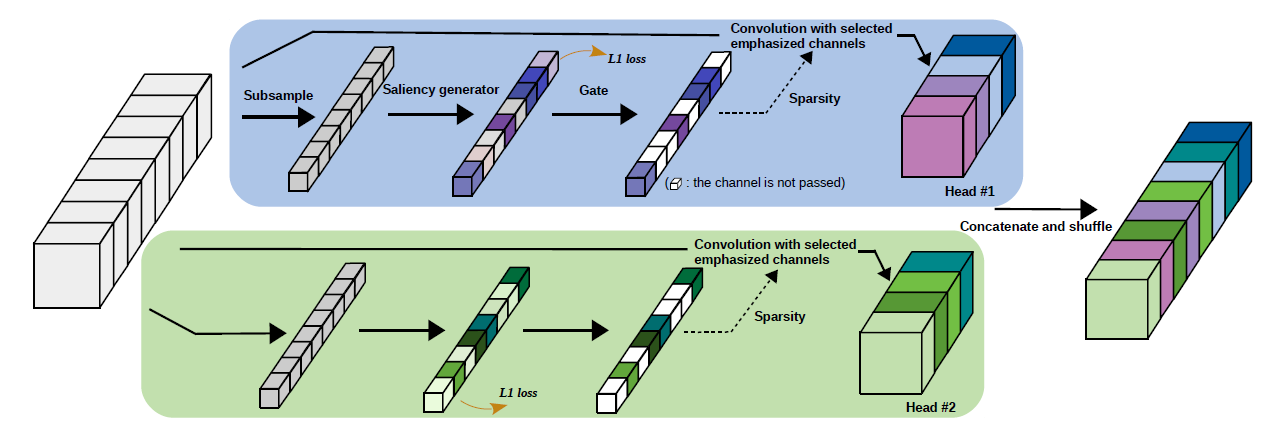

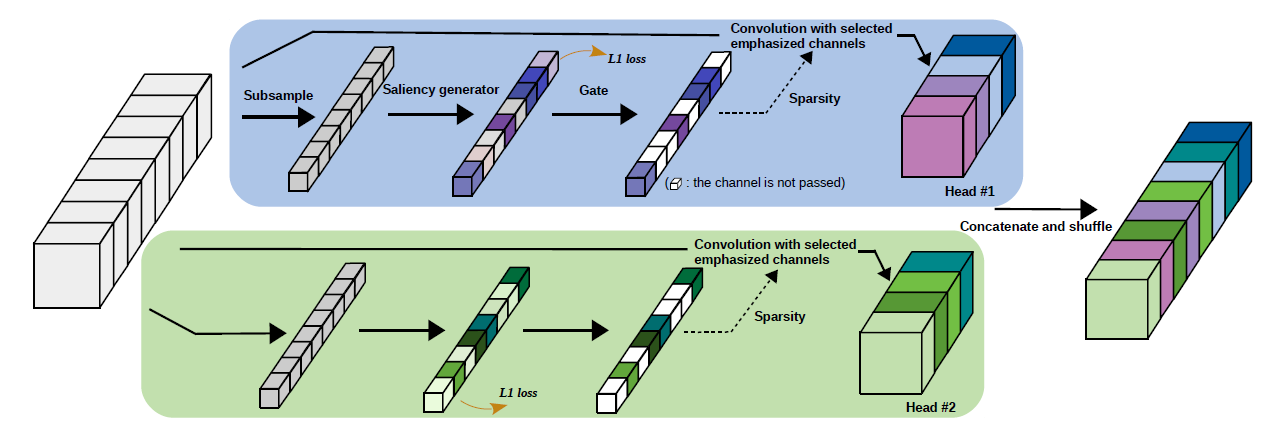

Figure 1: Overview of a DGC layer.

The DGC network can be trained from scratch in an end-to-end manner, without model pre-training. During backward propagation through a DGC layer, gradients are computed only for the weights connected to the channels selected in the forward pass; gradients for all other weights are safely set to 0 thanks to the unbiased gating strategy (refer to the paper).
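
As a rough illustration of why deselected channels receive zero gradient, the sketch below models a single output unit whose inputs are gated by a 0/1 channel mask. It is a toy under stated assumptions, not the repository's implementation: the helper names (`topk_mask`, `forward`, `weight_grads`) and the saliency values are hypothetical, and the real layer derives saliency from the input rather than from fixed numbers.

```python
def topk_mask(saliency, k):
    """Return a 0/1 mask keeping the k channels with the largest saliency."""
    order = sorted(range(len(saliency)), key=lambda i: saliency[i], reverse=True)
    mask = [0.0] * len(saliency)
    for i in order[:k]:
        mask[i] = 1.0
    return mask


def forward(x, w, mask):
    """Gated weighted sum: only selected channels contribute to the output."""
    return sum(wi * mi * xi for wi, mi, xi in zip(w, mask, x))


def weight_grads(x, mask, grad_out):
    """d(out)/d(w_i) = mask_i * x_i, so deselected channels get a zero gradient."""
    return [grad_out * mi * xi for mi, xi in zip(mask, x)]


# Hypothetical values, chosen only for illustration.
x = [0.5, -1.0, 2.0, 0.1]          # input channel activations
w = [1.0, 1.0, 1.0, 1.0]           # weights attached to each channel
saliency = [0.9, 0.2, 0.7, 0.1]    # assumed per-channel importance scores

mask = topk_mask(saliency, k=2)    # keep the two most salient channels
out = forward(x, w, mask)
grads = weight_grads(x, mask, grad_out=1.0)
```

Because the mask multiplies each channel before the weight is applied, the chain rule yields a zero weight gradient wherever the mask is zero, so no separate backward logic is needed in this toy version.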

To avoid abrupt changes in the training loss while pruning, we gradually deactivate input channels over the course of training, using a cosine-shaped learning rate schedule.
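
The sketch below shows one way such a schedule could look. The cosine-annealed learning rate follows the standard half-cosine formula; the ramp for the fraction of deactivated channels is an assumption made purely for illustration, since the text above does not specify how the pruning rate evolves. Both function names and all parameter values are hypothetical.

```python
import math


def cosine_lr(step, total_steps, base_lr):
    """Cosine-annealed learning rate: base_lr at step 0, decaying to 0 at total_steps."""
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * step / total_steps))


def pruned_fraction(step, total_steps, target):
    """Assumed ramp (not from the source): fraction of input channels
    deactivated at this step, rising smoothly from 0 to `target`."""
    return 0.5 * target * (1.0 - math.cos(math.pi * step / total_steps))


# Hypothetical settings: 100 steps, base LR 0.1, 75% of channels pruned by the end.
total_steps = 100
lrs = [cosine_lr(s, total_steps, base_lr=0.1) for s in range(total_steps + 1)]
fractions = [pruned_fraction(s, total_steps, target=0.75) for s in range(total_steps + 1)]
```

Under these assumptions the pruned fraction changes slowly at the start and end of training, which is one way to avoid a sudden jump in the loss when channels are switched off.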