Polygons

+ Contrast

+ Sharpen

+ Pad

+ Perspective

| Image | Heatmaps | Seg. Maps | Keypoints | Bounding Boxes, Polygons |

|

|---|---|---|---|---|---|

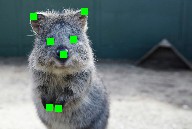

| Original Input |  |

|

|

|

|

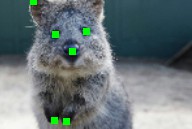

| Gauss. Noise + Contrast + Sharpen |

|

|

|

|

|

| Affine |  |

|

|

|

|

| Crop + Pad |

|

|

|

|

|

| Fliplr + Perspective |

|

|

|

|

|
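The overview above pairs each augmentation with an image and its annotations (keypoints, bounding boxes and so on). The point is that a geometric change such as Fliplr has to be applied to the annotations in the same way as to the pixels. The following is a minimal plain-Python sketch of that idea, not the library's implementation. The helper names (`flip_image_lr`, `flip_keypoints_lr`, `flip_boxes_lr`) are hypothetical, and coordinates are assumed to be integer pixel indices.

```python
# Hypothetical helpers: a horizontal flip applied consistently to an image
# and to its annotations. Assumes integer pixel-index coordinates.

def flip_image_lr(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def flip_keypoints_lr(keypoints, width):
    """Mirror (x, y) keypoints; column x moves to column width - 1 - x."""
    return [(width - 1 - x, y) for x, y in keypoints]


def flip_boxes_lr(boxes, width):
    """Mirror (x1, y1, x2, y2) boxes; the left and right edges swap roles."""
    return [(width - 1 - x2, y1, width - 1 - x1, y2) for x1, y1, x2, y2 in boxes]


image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
keypoints = [(0, 0), (3, 1)]
boxes = [(0, 0, 1, 1)]
width = len(image[0])

flipped_image = flip_image_lr(image)
flipped_keypoints = flip_keypoints_lr(keypoints, width)
flipped_boxes = flip_boxes_lr(boxes, width)

# Each keypoint still points at the same pixel value after the flip.
for (x, y), (fx, fy) in zip(keypoints, flipped_keypoints):
    assert image[y][x] == flipped_image[fy][fx]
```

Non-geometric augmentations in the overview (noise, contrast, sharpen) change pixel values only, so in a sketch like this the annotations would pass through unchanged.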

| meta | | | | |
|---|---|---|---|---|
| Noop | ChannelShuffle | | | |

| arithmetic | | | | |
|---|---|---|---|---|
| Add | Add (per_channel=True) | AdditiveGaussianNoise | AdditiveGaussianNoise (per_channel=True) | AdditiveLaplaceNoise |
| AdditiveLaplaceNoise (per_channel=True) | AdditivePoissonNoise | AdditivePoissonNoise (per_channel=True) | Multiply | Multiply (per_channel=True) |
| Dropout | Dropout (per_channel=True) | CoarseDropout (p=0.2) | CoarseDropout (p=0.2, per_channel=True) | ImpulseNoise |
| SaltAndPepper | Salt | Pepper | CoarseSaltAndPepper (p=0.2) | CoarseSalt (p=0.2) |
| CoarsePepper (p=0.2) | Invert | Invert (per_channel=True) | JpegCompression | |
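Many entries above appear twice, once plain and once with `per_channel=True`. The flag controls whether one random value is sampled for the whole image or a separate value for each channel. The following is a minimal plain-Python sketch of that difference for an Add-style operation. It is an illustration under stated assumptions, not the library's code: `add_value` and its `rng` argument are hypothetical, and the image is a nested list of shape height x width x channels with values in 0..255.

```python
import random


def add_value(image, low, high, per_channel=False, rng=None):
    """Add a random integer offset to every pixel, clipping to [0, 255].

    per_channel=False: one offset is sampled and shared by all channels.
    per_channel=True:  one offset is sampled independently for each channel.
    """
    rng = rng or random.Random()
    channels = len(image[0][0])
    if per_channel:
        offsets = [rng.randint(low, high) for _ in range(channels)]
    else:
        offsets = [rng.randint(low, high)] * channels
    return [
        [[min(255, max(0, v + o)) for v, o in zip(pixel, offsets)] for pixel in row]
        for row in image
    ]


# A 2 x 2 mid-gray RGB image.
gray = [[[100, 100, 100] for _ in range(2)] for _ in range(2)]

shared = add_value(gray, -20, 20, per_channel=False, rng=random.Random(0))
independent = add_value(gray, -20, 20, per_channel=True, rng=random.Random(0))
```

With a shared offset a gray input stays gray (only brightness changes), whereas independent offsets can shift the color balance, which is why the `per_channel=True` variants in the gallery tend to look tinted.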

| blend | | | | |
|---|---|---|---|---|
| Alpha with EdgeDetect(1.0) | Alpha with EdgeDetect(1.0) (per_channel=True) | SimplexNoiseAlpha with EdgeDetect(1.0) | FrequencyNoiseAlpha with EdgeDetect(1.0) | |

| blur | | | | |
|---|---|---|---|---|
| GaussianBlur | AverageBlur | MedianBlur | BilateralBlur (sigma_color=250, sigma_space=250) | MotionBlur (angle=0) |
| MotionBlur (k=5) | | | | |

| color | | | | |
|---|---|---|---|---|
| MultiplyHueAndSaturation | MultiplyHue | MultiplySaturation | AddToHueAndSaturation | AddToHue |
| AddToSaturation | Grayscale | KMeansColorQuantization (to_colorspace=RGB) | UniformColorQuantization (to_colorspace=RGB) | |

| contrast | | | | |
|---|---|---|---|---|
| GammaContrast | GammaContrast (per_channel=True) | SigmoidContrast (cutoff=0.5) | SigmoidContrast (gain=10) | SigmoidContrast (per_channel=True) |
| LogContrast | LogContrast (per_channel=True) | LinearContrast | LinearContrast (per_channel=True) | AllChannelsHistogramEqualization |
| HistogramEqualization | AllChannelsCLAHE | AllChannelsCLAHE (per_channel=True) | CLAHE | |

| convolutional | | | | |
|---|---|---|---|---|
| Sharpen (alpha=1) | Emboss (alpha=1) | EdgeDetect | DirectedEdgeDetect (alpha=1) | |

| edges | | | | |
|---|---|---|---|---|
| Canny | | | | |

| flip | | | | |
|---|---|---|---|---|
| Fliplr | Flipud | | | |

| geometric | | | | |
|---|---|---|---|---|
| Affine | Affine: Modes | | | |
| Affine: cval | PiecewiseAffine | | | |
| PerspectiveTransform | ElasticTransformation (sigma=0.2) | | | |
| ElasticTransformation (sigma=5.0) | Rot90 | | | |

| pooling | | | | |
|---|---|---|---|---|
| AveragePooling | MaxPooling | MinPooling | MedianPooling | |
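The four pooling entries differ only in how each block of pixels is reduced to a single value. The following is a minimal plain-Python sketch of block pooling on a single-channel image, given as an illustration rather than the library's implementation. The `pool2d` helper is hypothetical, and it simply drops incomplete blocks at the borders and returns the downscaled result; the library may handle borders and output size differently.

```python
from statistics import median


def pool2d(image, k, reduce_fn):
    """Reduce each non-overlapping k x k block of a 2D image to one value."""
    height, width = len(image), len(image[0])
    pooled = []
    # Only visit complete blocks; leftover rows and columns are ignored.
    for top in range(0, height - height % k, k):
        row = []
        for left in range(0, width - width % k, k):
            block = [image[y][x]
                     for y in range(top, top + k)
                     for x in range(left, left + k)]
            row.append(reduce_fn(block))
        pooled.append(row)
    return pooled


def mean(values):
    return sum(values) / len(values)


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]

avg_pooled = pool2d(image, 2, mean)       # average pooling
max_pooled = pool2d(image, 2, max)        # max pooling
min_pooled = pool2d(image, 2, min)        # min pooling
median_pooled = pool2d(image, 2, median)  # median pooling
```

Passing the reduction as a function keeps the block traversal identical across all four variants, so only `reduce_fn` changes.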

| segmentation | | | | |
|---|---|---|---|---|
| Superpixels (p_replace=1) | Superpixels (n_segments=100) | UniformVoronoi | RegularGridVoronoi: rows/cols (p_drop_points=0) | RegularGridVoronoi: p_drop_points (n_rows=n_cols=30) |
| RegularGridVoronoi: p_replace (n_rows=n_cols=16) | | | | |

| size | | | | |
|---|---|---|---|---|
| CropAndPad | Crop | | | |
| Pad | PadToFixedSize (height'=height+32, width'=width+32) | | | |
| CropToFixedSize (height'=height-32, width'=width-32) | | | | |

| weather | | | | |
|---|---|---|---|---|
| FastSnowyLandscape (lightness_multiplier=2.0) | Clouds | Fog | Snowflakes | |