GreatSQL crashes and restarts when group_replication is started inside an openEuler 22.03 SP1 container

I. Defect information

Kernel information:

Virtual machine: Linux 172-16-6-178 5.10.0-136.12.0.86.openeuler2203sp1.x86_64 #1 SMP Tue Dec 27 17:50:15 CST 2022 x86_64 x86_64 x86_64 GNU/Linux

Container: Linux 172-16-6-178 5.10.0-136.12.0.86.openeuler2203sp1.x86_64 #1 SMP Tue Dec 27 17:50:15 CST 2022 x86_64 x86_64 x86_64 GNU/Linux

Affected components:

openeuler2203sp1, GreatSQL 8.0.32-25

Affected version:

openeuler2203sp1

8.0.32-25 GreatSQL (GPL), Release 25, Revision db07cc5cb73

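Defect summary:

The GreatSQL database is installed in a Docker container on a Huawei openEuler 22.03 SP1 x86_64 virtual machine. The container's operating system is also openEuler 22.03 SP1 x86_64.

Three virtual machines are deployed in total. In the openEuler container on each virtual machine, /etc/hosts already contains the following name resolution entries: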

172.16.6.178 172-16-6-178

172.16.6.140 172-16-6-140

172.16.6.169 172-16-6-169

The database configuration file my.cnf contains the following:

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

slow_query_log = ON

long_query_time = 1

log_slow_verbosity = FULL

log_error_verbosity = 3

max_connections = 12000

innodb_buffer_pool_size = 3G

innodb_log_file_size = 1G

innodb_file_per_table = 1

innodb_flush_method = O_DIRECT

tmp_table_size = 32M

max_heap_table_size = 32M

thread_cache_size = 200

table_open_cache = 20

open_files_limit = 65535

sql-mode = NO_ENGINE_SUBSTITUTION

binlog-format=row

binlog_checksum=CRC32

binlog_transaction_dependency_tracking=writeset

enforce-gtid-consistency=true

gtid-mode=on

log-bin=/var/lib/mysql/mysql-bin

log_slave_updates=ON

loose-greatdb_ha_enable_mgr_vip=1

loose-greatdb_ha_mgr_vip_ip=172.16.6.1

loose-greatdb_ha_mgr_vip_mask='255.255.0.0'

loose-greatdb_ha_mgr_vip_nic='ens3'

loose-greatdb_ha_send_arp_package_times=5

loose-group-replication-ip-whitelist="172.16.6.178,172.16.6.140,172.16.6.169"

loose-group_replication_bootstrap_group=off

loose-group_replication_exit_state_action=READ_ONLY

loose-group_replication_flow_control_mode="DISABLED"

loose-group_replication_group_name="1b6241bb-77b7-40e2-9157-fa33a4b2df89"

loose-group_replication_group_seeds="172.16.6.178:33061,172.16.6.140:33061,172.16.6.169:33061"

loose-group_replication_local_address="172.16.6.178:33061"

loose-group_replication_single_primary_mode=ON

loose-group_replication_start_on_boot=off

loose-plugin_load_add='greatdb_ha.so'

loose-plugin_load_add='group_replication.so'

loose-plugin_load_add='mysql_clone.so'

master-info-repository=TABLE

relay-log-info-repository=TABLE

relay_log_recovery=on

server_id=1

slave_checkpoint_period=2

slave_parallel_type=LOGICAL_CLOCK

slave_parallel_workers=128

slave_preserve_commit_order=1

sql_require_primary_key=1

transaction_write_set_extraction=XXHASH64
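As a quick sanity check on the Group Replication addresses in a configuration like the one above, the following Python sketch normalizes option names (dropping the `loose-` prefix and treating `-` and `_` alike, as mysqld does) and verifies that the local address appears in the seed list and that its host is whitelisted. The helper names `parse_options` and `check_mgr_addresses` are hypothetical, only a subset of the options is embedded as sample text, and the whitelist check assumes plain IP entries rather than CIDR ranges. It is not part of GreatSQL.

```python
from collections import defaultdict

# A subset of the my.cnf options listed above, used as sample input.
SAMPLE = """
loose-greatdb_ha_enable_mgr_vip=1
loose-greatdb_ha_mgr_vip_ip=172.16.6.1
loose-group-replication-ip-whitelist="172.16.6.178,172.16.6.140,172.16.6.169"
loose-group_replication_group_seeds="172.16.6.178:33061,172.16.6.140:33061,172.16.6.169:33061"
loose-group_replication_local_address="172.16.6.178:33061"
loose-plugin_load_add='greatdb_ha.so'
loose-plugin_load_add='group_replication.so'
"""

def parse_options(text):
    """Return {normalized_name: [values]}; repeated options keep every value."""
    options = defaultdict(list)
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments, section headers and flag-only options.
        if not line or line.startswith(("#", ";", "[")) or "=" not in line:
            continue
        name, value = line.split("=", 1)
        name = name.strip().lower().replace("-", "_")
        if name.startswith("loose_"):
            name = name[len("loose_"):]
        options[name].append(value.strip().strip("'\""))
    return dict(options)

def check_mgr_addresses(options):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    local = options["group_replication_local_address"][-1]
    seeds = options["group_replication_group_seeds"][-1].split(",")
    allowed = options["group_replication_ip_whitelist"][-1].split(",")
    if local not in seeds:
        problems.append(f"local address {local} is not in group_seeds")
    host = local.rsplit(":", 1)[0]
    if host not in allowed:  # plain IP comparison only, no CIDR handling
        problems.append(f"host {host} is not in ip_whitelist")
    return problems

opts = parse_options(SAMPLE)
print(check_mgr_addresses(opts))
print(opts["plugin_load_add"])
```

For the sample taken from this report the check finds no address mismatch, which suggests the crash is not caused by inconsistent seed or whitelist settings.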

A cluster account was also configured on every node:

create user repl@'%' identified with mysql_native_password by 'Ch345@123';

grant replication slave, backup_admin on *.* to 'repl'@'%';

change master to master_user='repl', master_password='Ch345' for channel 'group_replication_recovery';

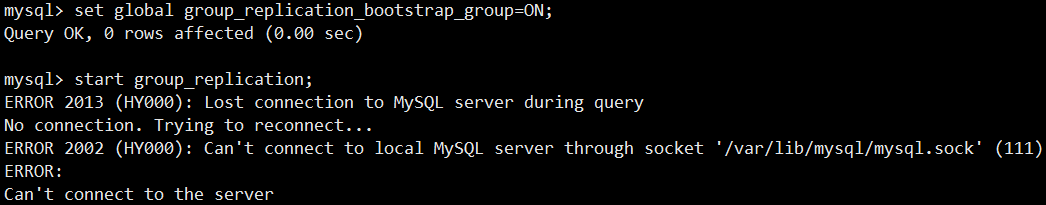

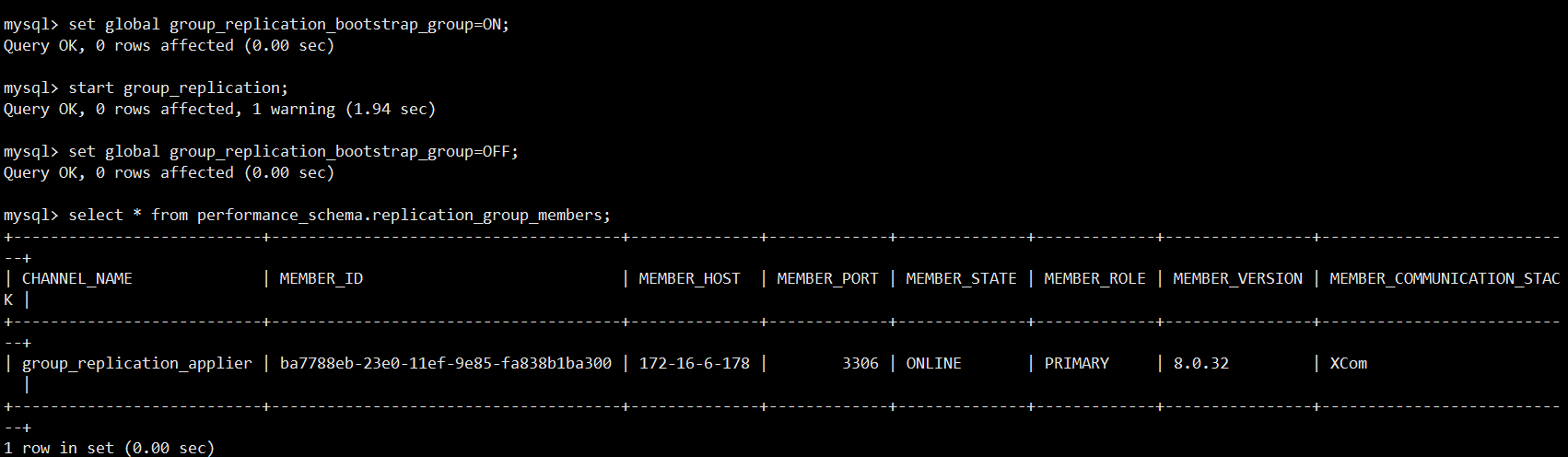

Enabling the cluster on the primary node fails at the second statement:

set global group_replication_bootstrap_group=ON;

start group_replication;

The database log shows that the server hit a fatal error and crashed:

2024-06-06T08:46:34.281353Z 154 [Note] [MY-011071] [Repl] Plugin group_replication reported: 'build_donor_list is called'

2024-06-06T08:46:34.281380Z 154 [Note] [MY-011071] [Repl] Plugin group_replication reported: 'build_donor_list is called over, size:0'

2024-06-06T08:46:34.281413Z 0 [Note] [MY-011071] [Repl] Plugin group_replication reported: 'handle_leader_election_if_needed is activated,suggested_primary:'

2024-06-06T08:46:34.281445Z 0 [System] [MY-011565] [Repl] Plugin group_replication reported: 'Setting super_read_only=ON.'

2024-06-06T08:46:34.281458Z 0 [System] [MY-011503] [Repl] Plugin group_replication reported: 'Group membership changed to 172-16-6-178:3306 on view 17176635942809729:1.'

2024-06-06T08:46:34.281475Z 154 [Note] [MY-011623] [Repl] Plugin group_replication reported: 'Only one server alive. Declaring this server as online within the replication group'

2024-06-06T08:46:34.282537Z 21 [Note] [MY-011071] [Repl] Plugin group_replication reported: 'continue to process queue after suspended'

2024-06-06T08:46:34.282569Z 21 [Note] [MY-011071] [Repl] Plugin group_replication reported: 'before getting certification info in log_view_change_event_in_order'

2024-06-06T08:46:34.282581Z 21 [Note] [MY-011071] [Repl] Plugin group_replication reported: 'after setting certification info in log_view_change_event_in_order'

2024-06-06T08:46:34.306474Z 0 [System] [MY-011490] [Repl] Plugin group_replication reported: 'This server was declared online within the replication group.'

2024-06-06T08:46:34.306595Z 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member gtid_executed: 1b6241bb-77b7-40e2-9157-fa33a4b2df89:1'

2024-06-06T08:46:34.306612Z 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member applier channel received_transaction_set: 1b6241bb-77b7-40e2-9157-fa33a4b2df89:1'

2024-06-06T08:46:34.306620Z 0 [System] [MY-011507] [Repl] Plugin group_replication reported: 'A new primary with address 172-16-6-178:3306 was elected. The new primary will execute all previous group transactions before allowing writes.'

2024-06-06T08:46:34Z UTC - mysqld got signal 6 ;

Most likely, you have hit a bug, but this error can also be caused by malfunctioning hardware.

BuildID[sha1]=fa0a5c40a97c29e73be6d445cdc39dbd25c6e45c

Build ID: fa0a5c40a97c29e73be6d445cdc39dbd25c6e45c

Server Version: 8.0.32-25 GreatSQL (GPL), Release 25, Revision db07cc5cb73

Thread pointer: 0x0

Attempting backtrace. You can use the following information to find out

where mysqld died. If you see no messages after this, something went

terribly wrong...

stack_bottom = 0 thread_stack 0x100000

2024-06-06T08:46:34.308180Z 155 [System] [MY-011565] [Repl] Plugin group_replication reported: 'Setting super_read_only=ON.'

/usr/sbin/mysqld(my_print_stacktrace(unsigned char const*, unsigned long)+0x3d) [0x235bfcd]

/usr/sbin/mysqld(print_fatal_signal(int)+0x3e3) [0x136ca03]

/usr/sbin/mysqld(handle_fatal_signal+0xc5) [0x136cad5]

/usr/lib64/libc.so.6(+0x40de0) [0x7fb59b7cfde0]

/usr/lib64/libc.so.6(+0x8ceef) [0x7fb59b81beef]

/usr/lib64/libc.so.6(raise+0x16) [0x7fb59b7cfd36]

/usr/lib64/libc.so.6(abort+0xd7) [0x7fb59b7bb177]

/usr/sbin/mysqld() [0xe905f2]

/usr/lib64/mysql/plugin/greatdb_ha.so(+0xe7e5) [0x7fb58c2617e5]

/usr/lib64/mysql/plugin/greatdb_ha.so(+0x141eb) [0x7fb58c2671eb]

/usr/lib64/mysql/plugin/greatdb_ha.so(+0x16269) [0x7fb58c269269]

/usr/lib64/mysql/plugin/greatdb_ha.so(+0x17d41) [0x7fb58c26ad41]

/usr/lib64/libc.so.6(+0x8b31a) [0x7fb59b81a31a]

/usr/lib64/libc.so.6(+0x10da80) [0x7fb59b89ca80]

Please help us make Percona Server better by reporting any

bugs at https://bugs.percona.com/

You may download the Percona Server operations manual by visiting

http://www.percona.com/software/percona-server/. You may find information

in the manual which will help you identify the cause of the crash.
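To see at a glance which module called `abort`, the frames in a backtrace like the one above can be grouped by binary. The Python sketch below does this for a shortened copy of the trace. `modules_after_abort` is a hypothetical helper name, and the regular expression assumes the `path(symbol+offset) [address]` frame layout seen in this log.

```python
import os
import re

# A shortened copy of the frames from the log above.
TRACE = """\
/usr/sbin/mysqld(handle_fatal_signal+0xc5) [0x136cad5]
/usr/lib64/libc.so.6(raise+0x16) [0x7fb59b7cfd36]
/usr/lib64/libc.so.6(abort+0xd7) [0x7fb59b7bb177]
/usr/sbin/mysqld() [0xe905f2]
/usr/lib64/mysql/plugin/greatdb_ha.so(+0xe7e5) [0x7fb58c2617e5]
/usr/lib64/mysql/plugin/greatdb_ha.so(+0x141eb) [0x7fb58c2671eb]
/usr/lib64/libc.so.6(+0x8b31a) [0x7fb59b81a31a]
"""

FRAME = re.compile(
    r"^(?P<path>/[^\s(]+)\((?P<symbol>.*)\)\s+\[(?P<addr>0x[0-9a-fA-F]+)\]$"
)

def parse_frames(text):
    """Yield (module_basename, symbol_and_offset) for every frame line."""
    for line in text.splitlines():
        match = FRAME.match(line.strip())
        if match:
            yield os.path.basename(match["path"]), match["symbol"]

def modules_after_abort(text):
    """List the distinct modules, in stack order, below the abort() frame."""
    seen_abort = False
    modules = []
    for module, symbol in parse_frames(text):
        if seen_abort and module not in modules:
            modules.append(module)
        if symbol.startswith("abort+"):
            seen_abort = True
    return modules

print(modules_after_abort(TRACE))
```

For this trace the first plugin below `abort` is greatdb_ha.so, which is consistent with the crash being raised from the VIP plugin's code path. If matching debug symbols are available, offsets such as +0xe7e5 could be resolved to source lines with a tool like addr2line.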

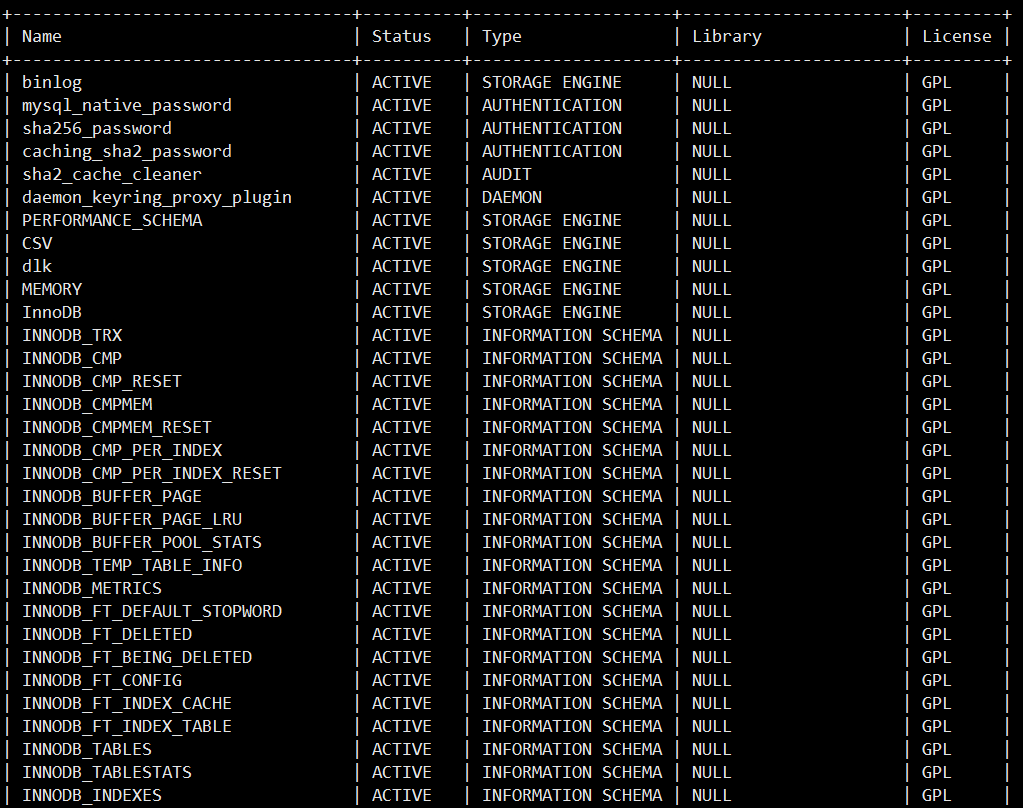

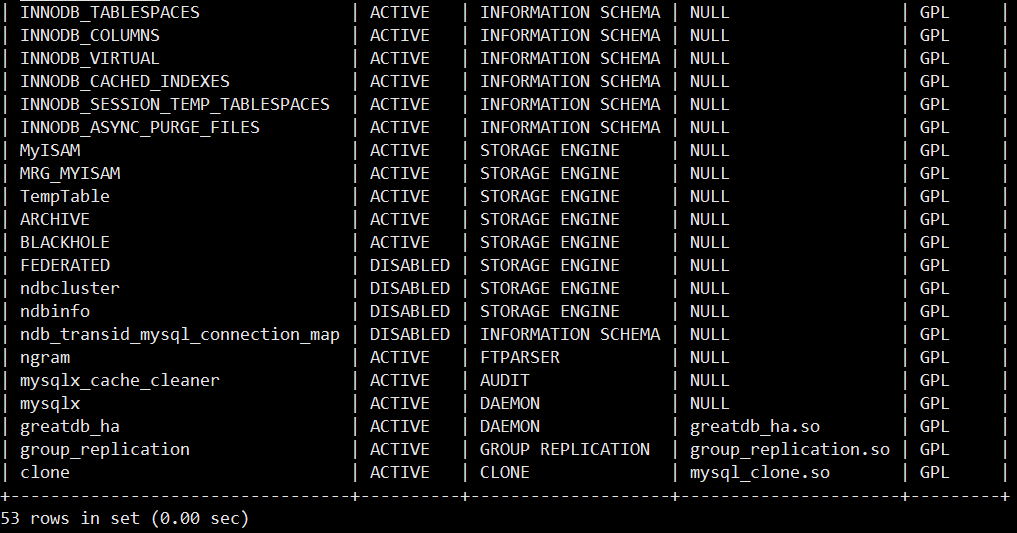

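All plugins show a normal status in the output of show plugins;

The GreatSQL maintainers replied that the crash is caused by the VIP feature. After I disabled the VIP with loose-greatdb_ha_enable_mgr_vip=0, the cluster could be started.

Inside a container, adding the NET_ADMIN capability with setcap is enough to set the VIP. In a CentOS 7.9 container we can set the VIP and start the cluster normally, but in an openEuler 22.03 SP1 container this does not work. Because we are migrating to domestically developed software, we need to use the cluster VIP inside an openEuler container. Does anyone know how to enable the GreatSQL cluster VIP in an openEuler container while avoiding this crash?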
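When comparing the CentOS 7.9 and openEuler containers, it may help to confirm which capabilities the running mysqld process actually holds. The Python sketch below decodes the `CapEff` bitmask that Linux exposes in `/proc/<pid>/status`. The bit numbers come from `linux/capability.h`. The helper names are hypothetical, the sample mask is the value commonly seen for a Docker container with default capabilities (not a value measured in this report), and checking `CAP_NET_RAW` in addition to `CAP_NET_ADMIN` is an assumption (sending ARP packets commonly needs raw sockets) that this report does not state.

```python
# Capability bit numbers as defined in linux/capability.h.
CAP_NET_ADMIN = 12
CAP_NET_RAW = 13

# Assumed sample: CapEff commonly seen with Docker's default capability set.
DOCKER_DEFAULT_MASK = "00000000a80425fb"

def has_capability(cap_mask_hex, bit):
    """Return True if the given bit is set in a CapEff-style hex mask."""
    return bool(int(cap_mask_hex, 16) & (1 << bit))

def effective_caps(pid="self"):
    """Read the CapEff value from /proc/<pid>/status (Linux only)."""
    with open(f"/proc/{pid}/status") as status:
        for line in status:
            if line.startswith("CapEff:"):
                return line.split()[1]
    raise RuntimeError("CapEff line not found")

# Decode the sample mask; on a real host, use effective_caps(<mysqld pid>).
print("CAP_NET_ADMIN:", has_capability(DOCKER_DEFAULT_MASK, CAP_NET_ADMIN))
print("CAP_NET_RAW:", has_capability(DOCKER_DEFAULT_MASK, CAP_NET_RAW))
```

Running `effective_caps` with the mysqld PID in both containers and comparing the two masks would show whether the setcap step took effect in the openEuler container in the same way as in the CentOS 7.9 one.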

[Environment information]

Hardware information

Command used to start the openEuler container:

docker run -itd --cap-add NET_ADMIN --privileged --name cloudweb --net host -e DISPLAY=:0 -e QT_X11_NO_MITSHM=1 -v /dev:/dev -v /sys:/sys -v /tmp/vpc:/tmp -v /run/vpc:/run -v /etc/shadow:/etc/shadow.docker -v /lib/modules:/lib/modules -v /opt/update:/opt/update -v /etc/sysconfig/network-scripts:/etc/sysconfig/network-scripts -v /etc/hostname:/etc/hostname -v /etc/hosts:/etc/hosts

Software information

openeuler2203sp1

8.0.32-25 GreatSQL (GPL), Release 25, Revision db07cc5cb73

Network information

The container shares the host's network

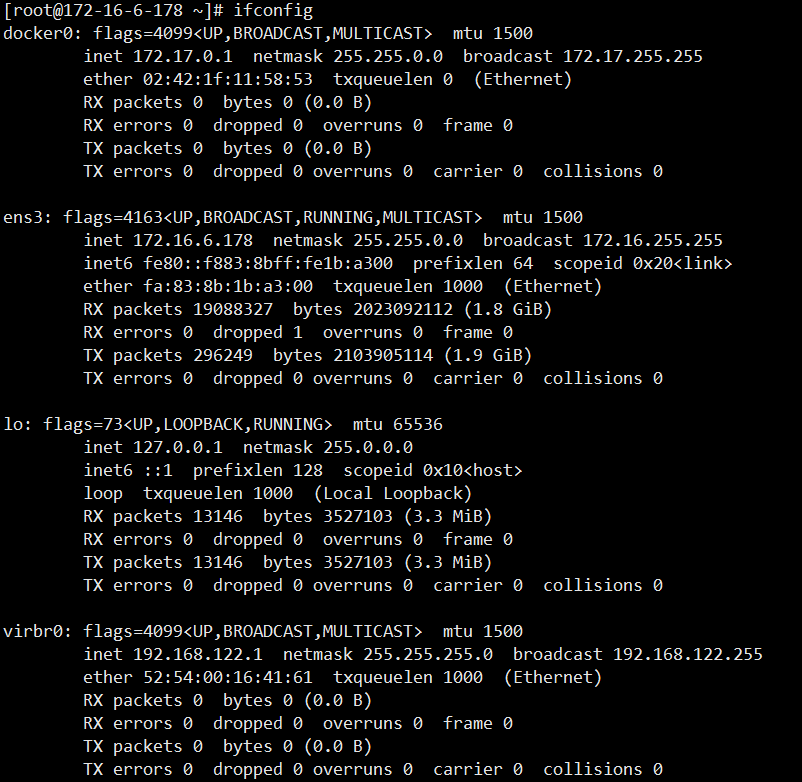

Container network information:

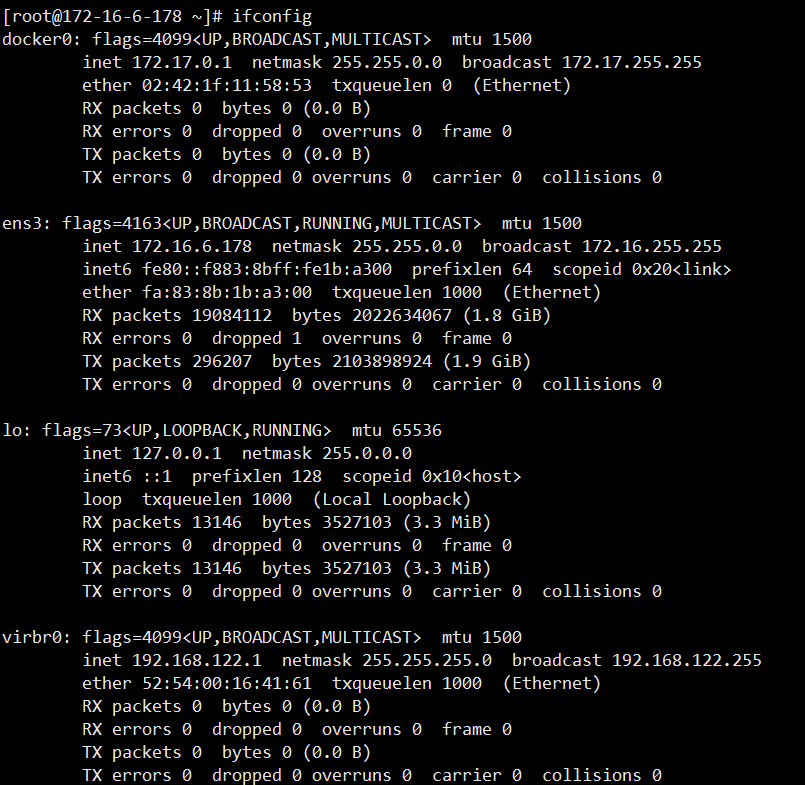

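Virtual machine network information:

[Steps to reproduce]

See above

[Actual result]

The GreatSQL MGR cluster cannot be started normally inside an openEuler 22.03 SP1 container

[Other relevant attachments]

See above

Defect details reference link:

Reply from the GreatSQL maintainers: https://gitee.com/GreatSQL/GreatSQL/issues/I9VTF8?from=project-issue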

Defect analysis guide link:
