I am trying to train a simple MNIST classifier with MindSpore on an Ascend NPU. The following code works on CPU, but fails with a cryptic error on the NPU.

The error is raised when the input is passed to the model. I thought it might be related to the shape of the input (img), but trying to print anything results in a "Sync stream error".

# Imports inferred from the names used below (they were not shown in the original post).
import numpy as np
import mindspore as ms
import mindspore.dataset as ds
from mindspore import ParameterTuple, ops
from mindspore.nn import WithLossCell
from mindspore.ops import composite as C
from mindspore.ops import functional as F
from mindspore.ops import operations as P
from tqdm import tqdm


class MNISTClassifier(ms.nn.Cell):
    """Two-layer fully connected classifier: 784 -> 784 -> 10."""

    def __init__(self):
        super(MNISTClassifier, self).__init__()
        self.dense_1 = ms.nn.Dense(28 * 28, 28 * 28)
        self.dense_1_act = ms.ops.ReLU()
        self.dense_2 = ms.nn.Dense(28 * 28, 10)
        self.dense_2_act = ms.ops.Sigmoid()

    def construct(self, x):
        return self.dense_2_act(self.dense_2(self.dense_1_act(self.dense_1(x))))


class TrainOneStepCell(ms.nn.Cell):
    """Wraps a loss network and an optimizer into a single training step."""

    def __init__(self, network, optimizer, sens=1.0):
        super(TrainOneStepCell, self).__init__(auto_prefix=False)
        self.network = network
        self.weights = ParameterTuple(network.trainable_params())
        self.optimizer = optimizer
        self.grad = C.GradOperation(get_by_list=True, sens_param=True)
        self.sens = sens

    def set_sens(self, value):
        self.sens = value

    def construct(self, data, label):
        weights = self.weights
        loss = self.network(data, label)
        # Sensitivity tensor with the same dtype and shape as the loss.
        sens = P.Fill()(P.DType()(loss), P.Shape()(loss), self.sens)
        grads = self.grad(self.network, weights)(data, label, sens)
        return F.depend(loss, self.optimizer(grads))


mnist_dataset = ds.MnistDataset(dataset_dir="data/mnist")
epochs = 3
lr = 1e-3
loss_fn = ms.nn.MSELoss()
model = MNISTClassifier()
optimizer = ms.nn.Adam(model.trainable_params(), learning_rate=lr)


def train_loop(model, dataset):
    model = WithLossCell(model, loss_fn)
    model = TrainOneStepCell(model, optimizer)
    model.set_train()
    for dt in tqdm(dataset.create_tuple_iterator()):
        img, label = dt
        label = int(label.asnumpy())
        # Scale pixels to [0, 1] and flatten to shape (1, 784).
        img /= 255.0
        img = img.astype(ms.dtype.float32)
        img = ops.expand_dims(img, 0)
        img = ops.flatten(img)
        # One-hot label of shape (1, 10).
        out_label = np.zeros(10, np.uint8)
        out_label[label] = 1
        out_label = ms.Tensor(out_label)
        out_label = ops.expand_dims(out_label, 0)
        # print(img)
        loss = model(img, out_label)


train_loop(model, mnist_dataset)
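Since printing tensors on the device fails, one way to rule out the input shape is to mirror the per-sample preprocessing from train_loop on the host with NumPy only. This is a minimal sketch, not a fix for the NPU error: `preprocess` is a hypothetical helper, and it assumes each MNIST sample arrives as a uint8 image of shape (28, 28, 1) with an integer label. It also builds the one-hot label as float32, whereas the loop above builds it as uint8.

```python
import numpy as np


def preprocess(img, label, num_classes=10):
    """Host-side mirror of the preprocessing in train_loop (hypothetical helper).

    img: uint8 array, assumed shape (28, 28, 1) or (28, 28).
    label: integer class index in [0, num_classes).
    """
    x = np.asarray(img, dtype=np.float32) / 255.0  # scale pixels to [0, 1]
    x = x.reshape(1, -1)                           # add batch dim and flatten
    y = np.zeros((1, num_classes), dtype=np.float32)
    y[0, int(label)] = 1.0                         # one-hot encode the label
    return x, y


# Random stand-in for one MNIST sample (assumed layout, not real data).
fake_img = np.random.randint(0, 256, size=(28, 28, 1), dtype=np.uint8)
x, y = preprocess(fake_img, 3)
print(x.shape, x.dtype, y.shape, y.dtype)
```

If the shapes and dtypes printed here match what the network expects, the input shape is unlikely to be the cause, which is consistent with the traceback below pointing at the MatMul operator's weight format instead.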

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

/tmp/ipykernel_2078262/1333301569.py in <module>

30

31

---> 32 train_loop(model, mnist_dataset)

/tmp/ipykernel_2078262/1333301569.py in train_loop(model, dataset)

27

28 # print(img)

---> 29 loss = model(img, out_label)

30

31

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in __call__(self, *args, **kwargs)

642 except Exception as err:

643 _pynative_executor.clear_res()

--> 644 raise err

645

646 if isinstance(output, Parameter):

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in __call__(self, *args, **kwargs)

638 _pynative_executor.new_graph(self, *args, **kwargs)

639 cast_inputs = self.auto_cast_inputs(args)

--> 640 output = self._run_construct(cast_inputs, kwargs)

641 _pynative_executor.end_graph(self, output, *args, **kwargs)

642 except Exception as err:

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in _run_construct(self, cast_inputs, kwargs)

423 output = self._shard_fn(*cast_inputs, **kwargs)

424 else:

--> 425 output = self.construct(*cast_inputs, **kwargs)

426 if self._enable_forward_hook:

427 output = self._run_forward_hook(cast_inputs, output)

~/work/mindspore_test/mnist_model.py in construct(self, data, label)

39 sens = P.Fill()(P.DType()(loss), P.Shape()(loss), self.sens)

40 grads = self.grad(self.network, weights)(data, label, sens)

---> 41 return F.depend(loss, self.optimizer(grads))

42

43

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/ops/composite/base.py in after_grad(*args, **kwargs)

394 if self.get_by_list:

395 def after_grad(*args, **kwargs):

--> 396 return grad_(fn, weights)(*args, **kwargs)

397 else:

398 def after_grad(*args, **kwargs):

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/common/api.py in wrapper(*arg, **kwargs)

96 @wraps(fn)

97 def wrapper(*arg, **kwargs):

---> 98 results = fn(*arg, **kwargs)

99 return _convert_python_data(results)

100

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/ops/composite/base.py in after_grad(*args, **kwargs)

384 @_wrap_func

385 def after_grad(*args, **kwargs):

--> 386 self._pynative_forward_run(fn, grad_, args, kwargs)

387 _pynative_executor.grad(fn, grad_, weights, self.grad_position, *args, **kwargs)

388 out = _pynative_executor(fn, grad_.sens_param, *args, **kwargs)

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/ops/composite/base.py in _pynative_forward_run(self, fn, grad, args, kwargs)

423 if not _pynative_executor.check_run(grad, fn, self.weights_id, *args, **new_kwargs):

424 fn.set_grad()

--> 425 fn(*args, **new_kwargs)

426 fn.set_grad(False)

427

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in __call__(self, *args, **kwargs)

642 except Exception as err:

643 _pynative_executor.clear_res()

--> 644 raise err

645

646 if isinstance(output, Parameter):

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in __call__(self, *args, **kwargs)

638 _pynative_executor.new_graph(self, *args, **kwargs)

639 cast_inputs = self.auto_cast_inputs(args)

--> 640 output = self._run_construct(cast_inputs, kwargs)

641 _pynative_executor.end_graph(self, output, *args, **kwargs)

642 except Exception as err:

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in _run_construct(self, cast_inputs, kwargs)

423 output = self._shard_fn(*cast_inputs, **kwargs)

424 else:

--> 425 output = self.construct(*cast_inputs, **kwargs)

426 if self._enable_forward_hook:

427 output = self._run_forward_hook(cast_inputs, output)

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/wrap/cell_wrapper.py in construct(self, data, label)

116 def construct(self, data, label):

117 out = self._backbone(data)

--> 118 return self._loss_fn(out, label)

119

120 @property

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in __call__(self, *args, **kwargs)

642 except Exception as err:

643 _pynative_executor.clear_res()

--> 644 raise err

645

646 if isinstance(output, Parameter):

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in __call__(self, *args, **kwargs)

638 _pynative_executor.new_graph(self, *args, **kwargs)

639 cast_inputs = self.auto_cast_inputs(args)

--> 640 output = self._run_construct(cast_inputs, kwargs)

641 _pynative_executor.end_graph(self, output, *args, **kwargs)

642 except Exception as err:

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/cell.py in _run_construct(self, cast_inputs, kwargs)

423 output = self._shard_fn(*cast_inputs, **kwargs)

424 else:

--> 425 output = self.construct(*cast_inputs, **kwargs)

426 if self._enable_forward_hook:

427 output = self._run_forward_hook(cast_inputs, output)

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/loss/loss.py in construct(self, logits, labels)

317 _check_is_tensor('labels', labels, self.cls_name)

318 x = F.square(logits - labels)

--> 319 return self.get_loss(x)

320

321

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/nn/loss/loss.py in get_loss(self, x, weights)

150 x = self.cast(x, mstype.float32)

151 weights = self.cast(weights, mstype.float32)

--> 152 x = self.mul(weights, x)

153 if self.reduce and self.average:

154 x = self.reduce_mean(x, self.get_axis(x))

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/ops/primitive.py in __call__(self, *args)

294 if should_elim:

295 return output

--> 296 return _run_op(self, self.name, args)

297

298 def __getstate__(self):

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/common/api.py in wrapper(*arg, **kwargs)

96 @wraps(fn)

97 def wrapper(*arg, **kwargs):

---> 98 results = fn(*arg, **kwargs)

99 return _convert_python_data(results)

100

~/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/ops/primitive.py in _run_op(obj, op_name, args)

731 def _run_op(obj, op_name, args):

732 """Single op execution function supported by ge in PyNative mode."""

--> 733 output = real_run_op(obj, op_name, args)

734 return output

RuntimeError: Single op compile failed, op: mat_mul_11249821765871562993_0

except_msg: 2024-04-15 22:21:23.709597+00:00: Query except_msg:Traceback (most recent call last):

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/te_fusion/parallel_compilation.py", line 1643, in run

optional_input_mode=self._optional_input_mode)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/te_fusion/fusion_manager.py", line 1337, in build_single_op

compile_info = call_op()

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/te_fusion/fusion_manager.py", line 1324, in call_op

opfunc(*inputs, *outputs, *new_attrs, **kwargs)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/common/utils/para_check.py", line 547, in _in_wrapper

return func(*args, **kwargs)

File "/usr/local/Ascend/ascend-toolkit/latest/opp/built-in/op_impl/ai_core/tbe/impl/mat_mul.py", line 257, in mat_mul

gemm_impl(input_x1, input_x2, output_y, attrs, mode="static")

File "/usr/local/Ascend/ascend-toolkit/latest/opp/built-in/op_impl/ai_core/tbe/impl/util/util_gemm.py", line 661, in gemm_impl

result = gemm_compute(tensor_a, tensor_b, output_y, para_dict, mode)

File "/usr/local/Ascend/ascend-toolkit/latest/opp/built-in/op_impl/ai_core/tbe/impl/util/util_gemm.py", line 374, in gemm_compute

return tbe.gemm(tensor_a=input_a, tensor_b=input_b, para_dict=para_dict)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/dsl/api.py", line 1420, in gemm

return gemm_compute.gemm(tensor_a, tensor_b, para_dict)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/common/utils/para_check.py", line 994, in in_wrapper

return func(*args, **kwargs)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/dsl/compute/gemm_compute.py", line 562, in gemm

result = gemm_integrated(tensor_a, tensor_b, para_dict)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/common/utils/para_check.py", line 994, in in_wrapper

return func(*args, **kwargs)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/dsl/compute/gemm_integrated_compute.py", line 76, in gemm

result = gemm_compute.compute()

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/dsl/compute/gemm_integrated_compute.py", line 301, in compute

self._preprocess()

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/dsl/compute/gemm_integrated_compute.py", line 319, in _preprocess

self._check_format()

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/dsl/compute/gemm_integrated_compute.py", line 491, in _check_format

error_manager_cube.raise_err_specific(self.op_type, reason)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/common/utils/errormgr/error_manager_cube.py", line 229, in raise_err_specific

raise_runtime_error_cube(args_dict, msg)

File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/common/utils/errormgr/error_manager_util.py", line 69, in raise_runtime_error_cube

raise RuntimeError(args_dict, *msgs)

RuntimeError:

({'errCode': 'E60108', 'op_name': 'MatMulV2', 'reason': "The supported format_b list is ['FRACTAL_Z', 'FRACTAL_NZ', 'FRACTAL_ZN_RNN'], while the current format_b is ND."}, "In op[MatMulV2], [The supported format_b list is ['FRACTAL_Z', 'FRACTAL_NZ', 'FRACTAL_ZN_RNN'], while the current format_b is ND.]")

----------------------------------------------------

- C++ Call Stack: (For framework developers)

----------------------------------------------------

mindspore/ccsrc/plugin/device/ascend/kernel/tbe/tbe_kernel_compile.cc:471 QueryProcess
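The useful part of this traceback is the final record with the error code and reason; everything above it is framework plumbing. As a minimal sketch, that record can be pulled out of a long log automatically. `extract_error_records` is a hypothetical helper, and it assumes the record appears on one line as a Python literal starting with `({'errCode'`, as it does in the log above.

```python
import ast


def extract_error_records(log_text):
    """Return the error dicts found in log_text (hypothetical helper).

    Assumes each record is a single line holding a Python tuple literal
    whose first element is a dict with an 'errCode' key.
    """
    records = []
    for line in log_text.splitlines():
        line = line.strip()
        if not line.startswith("({'errCode'"):
            continue
        try:
            parsed = ast.literal_eval(line)  # safe parsing of literals only
        except (ValueError, SyntaxError):
            continue  # skip lines that merely look like a record
        if isinstance(parsed, tuple) and parsed and isinstance(parsed[0], dict):
            records.append(parsed[0])
    return records


# The record copied from the traceback above, surrounded by unrelated lines.
sample_log = """
RuntimeError:
({'errCode': 'E60108', 'op_name': 'MatMulV2', 'reason': "The supported format_b list is ['FRACTAL_Z', 'FRACTAL_NZ', 'FRACTAL_ZN_RNN'], while the current format_b is ND."}, "In op[MatMulV2], [The supported format_b list is ['FRACTAL_Z', 'FRACTAL_NZ', 'FRACTAL_ZN_RNN'], while the current format_b is ND.]")
- C++ Call Stack: (For framework developers)
"""

for record in extract_error_records(sample_log):
    print(record["errCode"], record["op_name"], record["reason"])
```

Quoting just the code (E60108), the operator (MatMulV2), and the reason when reporting the problem makes it easier to search for and triage.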

Please assign a maintainer to check this issue.

@fangwenyi @chengxiaoli @Shawny


Thank you for your question. You can comment //mindspore-assistant to get help more quickly.

Please provide the following information so that we can investigate further:

import mindspore;mindspore.set_context(device_target='Ascend');mindspore.run_check()

MindSpore version: 1.10.0

The result of multiplication calculation is correct, MindSpore has been installed successfully!

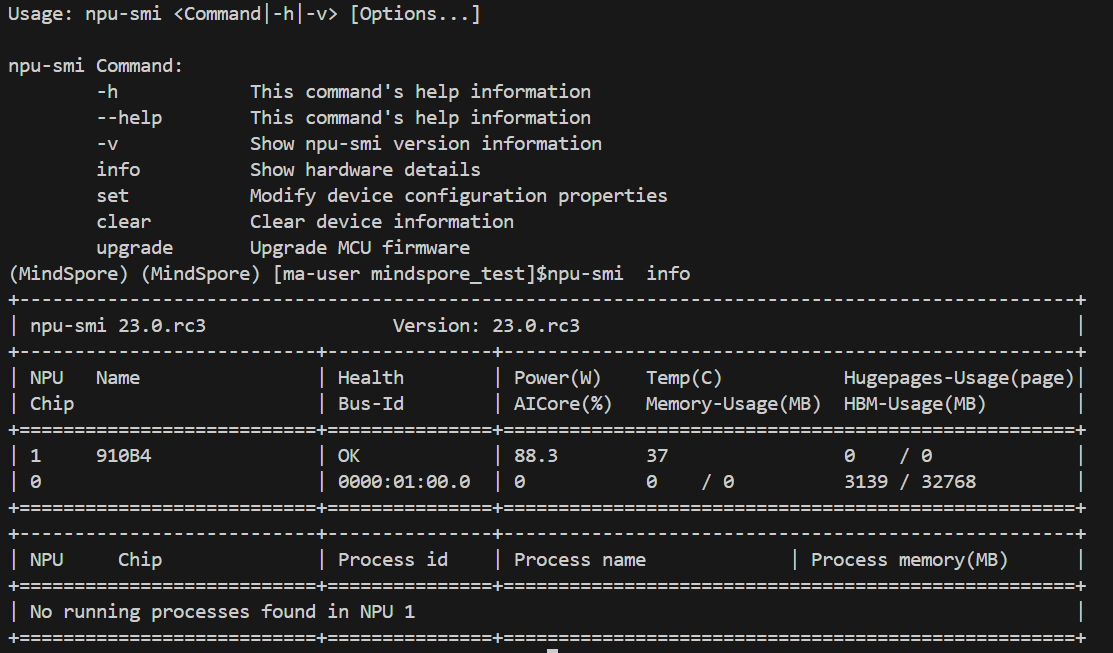

npu-smi info screenshot

Trying to run the examples at https://www.mindspore.cn/doc/programming_guide/en/r1.1/train.html?highlight=training also results in cryptic errors:

[WARNING] ME(4079008:281473665092160,MainProcess):2024-04-19-06:05:47.391.091 [mindspore/dataset/core/validator_helpers.py:806] 'Resize' from mindspore.dataset.vision.c_transforms is deprecated from version 1.8 and will be removed in a future version. Use 'Resize' from mindspore.dataset.vision instead.

[WARNING] ME(4079008:281473665092160,MainProcess):2024-04-19-06:05:47.391.429 [mindspore/dataset/core/validator_helpers.py:806] 'Rescale' from mindspore.dataset.vision.c_transforms is deprecated from version 1.8 and will be removed in a future version. Use 'Rescale' from mindspore.dataset.vision instead.

[WARNING] ME(4079008:281473665092160,MainProcess):2024-04-19-06:05:47.391.651 [mindspore/dataset/core/validator_helpers.py:806] 'Rescale' from mindspore.dataset.vision.c_transforms is deprecated from version 1.8 and will be removed in a future version. Use 'Rescale' from mindspore.dataset.vision instead.

[WARNING] ME(4079008:281473665092160,MainProcess):2024-04-19-06:05:47.391.832 [mindspore/dataset/core/validator_helpers.py:806] 'HWC2CHW' from mindspore.dataset.vision.c_transforms is deprecated from version 1.8 and will be removed in a future version. Use 'HWC2CHW' from mindspore.dataset.vision instead.

[WARNING] ME(4079008:281473665092160,MainProcess):2024-04-19-06:05:47.391.931 [mindspore/dataset/core/validator_helpers.py:806] 'TypeCast' from mindspore.dataset.transforms.c_transforms is deprecated from version 1.8 and will be removed in a future version. Use 'TypeCast' from mindspore.dataset.transforms instead.

============== Starting Training ==============

[ERROR] DEVICE(4079008,ffffb1d24a40,python):2024-04-19-06:06:07.391.319 [mindspore/ccsrc/plugin/device/ascend/hal/device/ascend_kernel_runtime.cc:675] TaskFailCallback] Execute TaskFailCallback failed. task_fail_info or current_graph_ is nullptr

[ERROR] GE(4079008,ffffb1d24a40,python):2024-04-19-06:06:07.394.552 [mindspore/ccsrc/plugin/device/ascend/hal/device/ge_runtime/runtime_model.cc:250] RtModelUnbindStream] Unbind stream from model failed! Index: 0

Traceback (most recent call last):

File "mnist_datasink.py", line 125, in <module>

model.train(epoch=10, train_dataset=ds_train, callbacks=[LossMonitor()], dataset_sink_mode=True, sink_size=1000)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/model.py", line 1051, in train

initial_epoch=initial_epoch)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/model.py", line 98, in wrapper

func(self, *args, **kwargs)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/model.py", line 625, in _train

cb_params, sink_size, initial_epoch, valid_infos)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/model.py", line 684, in _train_dataset_sink_process

dataset_helper=dataset_helper)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/model.py", line 442, in _exec_preprocess

dataset_helper = DatasetHelper(dataset, dataset_sink_mode, sink_size, epoch_num)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/dataset_helper.py", line 350, in __init__

self.iter = iterclass(dataset, sink_size, epoch_num)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/dataset_helper.py", line 568, in __init__

super().__init__(dataset, sink_size, epoch_num)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/dataset_helper.py", line 466, in __init__

create_data_info_queue=create_data_info_queue)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/train/_utils.py", line 74, in _exec_datagraph

phase=phase)

File "/home/ma-user/anaconda3/envs/MindSpore/lib/python3.7/site-packages/mindspore/common/api.py", line 1047, in init_dataset

need_run=need_run):

RuntimeError: Preprocess failed before run graph 0.

----------------------------------------------------

- Ascend Error Message:

----------------------------------------------------

EE9999: Inner Error, Please contact support engineer!

EE9999 Stream bind failed, stream_id=3, model_id=0, default stream sync error, retCode=0x7150004![FUNC:BindStream][FILE:model.cc][LINE:335]

TraceBack (most recent call last):

Bind stream failed, model_id=0, stream_id=3, retCode=0x7150004.[FUNC:ModelBindStream][FILE:context.cc][LINE:1655]

rtModelBindStream execute failed, reason=[tsfw param illegal][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:49]

Stream unbind failed, stream is not binded with specified model, stream_id=3, specified model_id=0![FUNC:UnbindStream][FILE:model.cc][LINE:366]

Unbind stream failed, model_id=0, stream_id=3, retCode=0x7030005.[FUNC:ModelUnbindStream][FILE:context.cc][LINE:1671]

rtModelUnbindStream execute failed, reason=[stream is not in model][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:49]

----------------------------------------------------

- Framework Error Message: (For framework developers)

----------------------------------------------------

Call rt api rtModelBindStream failed, ret: 507001

----------------------------------------------------

- C++ Call Stack: (For framework developers)

----------------------------------------------------

mindspore/ccsrc/plugin/device/ascend/hal/hardware/ascend_kernel_executor.cc:208 PreprocessBeforeRunGraph

mindspore/ccsrc/plugin/device/ascend/hal/device/ge_runtime/runtime_model.cc:91 InitStream

A more informative error would be helpful here.

MindSpore 1.10.0 is an old version and may produce errors on B4.

We recommend trying version 2.3 or later if you want to test network training.
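Checking an installed version against that recommendation is easy to get wrong with plain string comparison, because "1.10.0" sorts after "1.9" numerically but before it as text. A minimal sketch of a numeric comparison, assuming dotted version strings; `version_tuple` and `meets_minimum` are hypothetical helper names, not part of MindSpore:

```python
def version_tuple(version):
    """Convert a dotted version such as '1.10.0' into a tuple of ints.

    Parsing of each component stops at the first non-digit, so a suffix
    such as 'rc1' is ignored; parsing ends at the first empty component.
    """
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True if installed >= minimum, comparing components numerically."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))  # pad so that '2.3' compares like '2.3.0'
    b += (0,) * (width - len(b))
    return a >= b


# The version reported in this thread against the recommended minimum.
print(meets_minimum("1.10.0", "2.3"))
```

In a real environment the first argument would come from `mindspore.__version__`.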

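Hello, this issue has been closed for now because there has been no reply. If you still have questions, please share the specific details and change the issue status to WIP, and we will follow up. Thank you.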
